Hannes Heikinheimo

Sep 19, 2023

1 min read

As voice user interfaces are still quite new, designers can easily go wrong when building their first voice application. In this blog post, I'll give you three basic principles that can improve the user experience of any voice user interface. Finally, I'll show you an example of a simple voice application that doesn't suffer from these typical issues.

For more design guidelines and best practices, you can refer to our Design Guidelines.

Conversational AI is a hot buzzword, but most people don't want to converse with their computers. Most if not all of us have better friends, even though we often spend more time with our computers and mobile phones than with any of them.

Most often, conversational AI refers to chatbots. Google defines a chatbot as "a computer program designed to simulate conversation with human users, especially over the Internet". Chatbots can be voice-based or text-based, but the key is that the interaction is bidirectional.

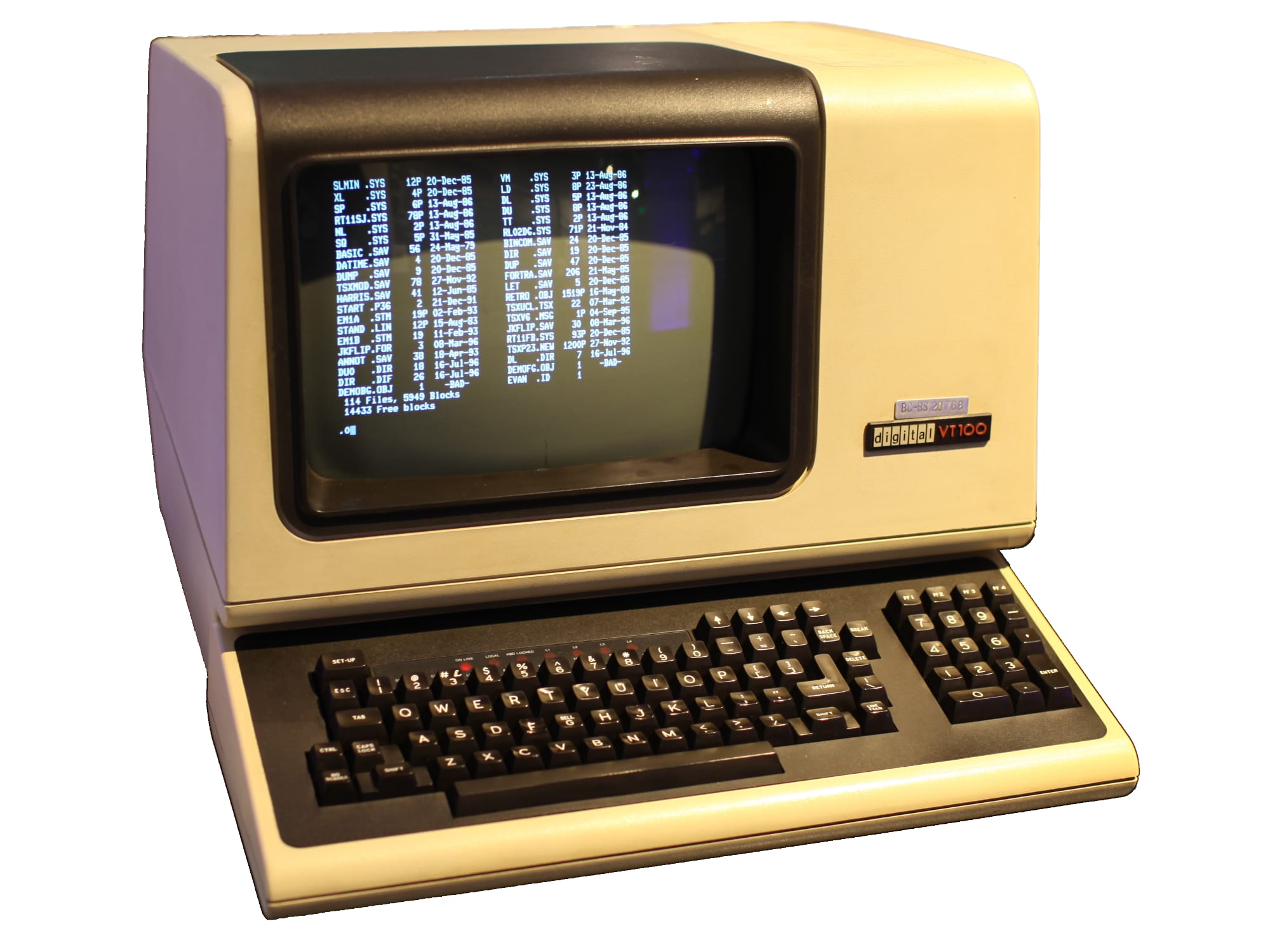

As you can see, it's pretty tedious. Some might call it "conversational", but to me, it's closer to the early user interfaces I referred to earlier. The differences between a chatbot and a text-based UI are that instead of commands, you write natural (or almost natural) language, and instead of giving one command, your order progresses slowly as the UI asks for the details of the order one at a time. While that might be easier to learn initially, it's a lot slower when you do it for the tenth time.

So point number 1: your users don't want to converse. They want to achieve something, and they want to do that with minimal effort and time.

This point is rather obvious, but still often misunderstood, especially when it comes to voice. The most common voice user interfaces that people use in their everyday lives are smart speakers, and while they are quickly gaining popularity, they are not the best examples of voice user interfaces.

Modalities in user interaction context are defined as single independent channels of sensory input/output between the computer and its user. Examples of modalities include vision (display), touch, voice (both for input and output), etc. Most computer systems support many modalities at once – for example, you are currently reading this by using a display, but you can also select parts of the text by using touch or a mouse.

Voice assistants, on the other hand, only support voice. You can command them by using voice and they answer by using voice. This works great in some settings, especially in a car, where users' hands are busy and they should be looking at the road, not a display. The problem with this approach is that voice is a pretty slow way of transmitting information.

Let's say you'd like to know the 10 biggest cities in the US and their growth rates. Here is that information in visual form.

| Rank | City | State | Population (2019) | Change since 2010 |
|---|---|---|---|---|
| 1 | New York | New York | 8,336,817 | +1.98% |
| 2 | Los Angeles | California | 3,979,576 | +4.93% |
| 3 | Chicago | Illinois | 2,693,976 | −0.06% |
| 4 | Houston | Texas | 2,320,268 | +10.48% |
| 5 | Phoenix | Arizona | 1,680,992 | +16.28% |
| 6 | Philadelphia | Pennsylvania | 1,584,064 | +3.80% |
| 7 | San Antonio | Texas | 1,547,253 | +16.56% |
| 8 | San Diego | California | 1,423,851 | +8.91% |
| 9 | Dallas | Texas | 1,343,573 | +12.17% |
| 10 | San Jose | California | 1,021,795 | +8.02% |

But now think of how you would say this so that the person listening would also comprehend it. It's not very simple, and it would easily take a minute or two just to say it out loud.

Another common use case where multiple modalities work great is choosing from several options. If you wanted to see details about one of these cities, you could say something like "Show more information about number 4 (or Houston)", but it would be even easier to just click on Houston and see more information.

There are tasks where voice works great and there are tasks where something else works great. Don't force your users to listen to long speeches; use a display whenever possible.

So point 2: always support all relevant user interaction modalities in your app.

People tend to think that the problem with voice user interfaces has to do with the accuracy of ASR (automatic speech recognition, transcribing speech to text) or, in general, with how well the technology performs. Of course, sometimes there are issues with how the system understands the commands, but it's also a human problem. We are just not very good at expressing more complex ideas in exact and concise language.

Let's say you had a general artificial intelligence voice assistant that could comprehend and do pretty much everything, but did not yet know how to play rock-paper-scissors. It's not very hard to teach this game to a 5-year-old, but try coming up with a voice command that would do that. Think of something like "Hey Alexa, let's play a game where we both pick one of rock, paper, or scissors. The scissors would beat paper but lose to rock and the paper would beat rock..."

Even if the machine could transcribe every word you utter perfectly, it would be very hard to express the rules of the game concisely in one utterance. This is one reason why smart speakers often don't get your intentions right. It's not that they didn't understand or that they heard wrong, but rather that you expressed yourself wrong. You thought that the smart speaker would be smart enough to understand that when you say "turn off the lights", you meant "turn off the lights that I want to turn off" or "turn off all the lights in my immediate vicinity but not the garage door light I always keep on or the upper floor toilet light that is on because someone is currently using it". It's not.

Voice user interfaces should support this ambiguous and often contradictory way we humans use language. Constantly show the user what's happening and support many ways of expressing the same thing. Make sure your application works the same even if the order of commands is different. Keep track of the user's context in the application and refer to it.
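To make this more concrete, here is a minimal sketch of a light controller that accepts several wordings for the same intent, gives the same result regardless of the order of the commands, and remembers the last room as context. Everything in it (the `LightSession` class, the word list, the room names and the feedback strings) is a hypothetical illustration rather than any real voice API, and it works on text that has already been transcribed.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical vocabulary: several words map to the same intent.
INTENT_WORDS = {"off": "off", "out": "off", "on": "on"}
ROOMS = ("kitchen", "bedroom", "garage")


@dataclass
class LightSession:
    """Keeps the conversation context between utterances."""
    intent: Optional[str] = None
    room: Optional[str] = None
    lights: Dict[str, str] = field(
        default_factory=lambda: {room: "on" for room in ROOMS}
    )

    def hear(self, utterance: str) -> str:
        """Update the context from one utterance and return display feedback."""
        for word in re.findall(r"[a-z]+", utterance.lower()):
            if word in INTENT_WORDS:
                self.intent = INTENT_WORDS[word]
            elif word in ROOMS:
                self.room = word
        if self.intent is None and self.room is None:
            return "Say a room, an action, or both"
        if self.intent is None:
            return f"{self.room}: on or off?"
        if self.room is None:
            return f"Turn {self.intent} which lights?"
        self.lights[self.room] = self.intent
        done = f"{self.room} lights are now {self.intent}"
        self.intent = None  # the action is done; the room stays as context
        return done


# Action first, room second.
first = LightSession()
print(first.hear("Turn off the lights"))  # Turn off which lights?
print(first.hear("kitchen"))              # kitchen lights are now off

# Room first, action second, with different wording.
second = LightSession()
print(second.hear("The kitchen, please"))  # kitchen: on or off?
print(second.hear("lights out"))           # kitchen lights are now off

# The remembered room resolves "them" in a follow-up command.
print(first.hear("turn them back on"))     # kitchen lights are now on
```

Every call returns a short status line, so the display can always show what the application has understood so far instead of staying silent until the command is complete.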

In our rock-paper-scissors game, the more natural way of expressing the rules would be in short steps. Something like:

"There are two players who pick one of three options: rock, paper or scissors" "OK"

"The players choose their option on a count of three" "A-ha"

"Rock beats scissors but loses to paper" "Got it"

"Scissors beat paper but lose to rock" "Understood"

"And finally paper beats rock but loses to scissors" "Perfect"

"If both players pick the same option, it's a tie" "Sure"

Point 3: don't expect your users to express a complex task in one long command, but rather let them split it into subtasks.

Earlier, we saw an example of ordering a pizza by using a chatbot. It wasn't very quick, but how should it be done?

Here's a quick example to show how a pizza could be ordered by using a multimodal voice user interface. The application listens to orders when the microphone is activated and shows new items appearing in the cart in real time. If the user wants to modify an order, for example to edit the toppings, they click on an item to modify it.

The application gives enough context for the user but doesn't clutter the view with extra elements. It doesn't require users to chat with the computer, but rather waits for user commands and obeys them as accurately and quickly as possible.
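The interaction described above can be sketched as a single cart that both voice and touch update, with the display re-rendered after every change. This is only an illustration under stated assumptions: the `Cart` and `CartItem` classes, the `"add"` and `"remove"` intent names and the product names are hypothetical, and the voice commands are assumed to arrive already parsed into an intent, a product and a quantity.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CartItem:
    product: str
    quantity: int = 1
    toppings: List[str] = field(default_factory=list)


@dataclass
class Cart:
    items: List[CartItem] = field(default_factory=list)

    def on_voice(self, intent: str, product: str, quantity: int = 1) -> None:
        """Handle one parsed voice command (hypothetical intent names)."""
        if intent == "add":
            self.items.append(CartItem(product, quantity))
        elif intent == "remove":
            self.items = [i for i in self.items if i.product != product]

    def on_click_edit(self, index: int, toppings: List[str]) -> None:
        """Handle a touch or mouse edit of the item at the given position."""
        self.items[index].toppings = list(toppings)

    def render(self) -> List[str]:
        """Return the lines the display would show after every change."""
        lines = []
        for item in self.items:
            extra = f" with {', '.join(item.toppings)}" if item.toppings else ""
            lines.append(f"{item.quantity} x {item.product}{extra}")
        return lines


cart = Cart()
cart.on_voice("add", "margherita", 2)       # spoken: "two margheritas"
cart.on_voice("add", "cola")                # spoken: "and a cola"
cart.on_click_edit(0, ["basil", "olives"])  # clicked on the first item
print(cart.render())  # ['2 x margherita with basil, olives', '1 x cola']
```

Voice is used where it is fast (adding items) and touch where it is precise (editing one item), while both write to the same state, so the user always sees the result of the last command.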

And by the way, building and prototyping voice user interfaces doesn't need to be that hard. With Speechly, you can create your first application and try it out in a few minutes. Check out our Quick Start video, sign up to our Dashboard, and start prototyping!

Speechly is a YC-backed company building tools for speech recognition and natural language understanding. Speechly offers flexible deployment options (cloud, on-premise, and on-device), highly accurate custom models for any domain, and privacy and scalability for hundreds of thousands of hours of audio.
