Hannes Heikinheimo

Sep 19, 2023

The rapid rise of multiplayer online gaming has turned video games into social experiences. Voice chat has become an important channel for that social experience, but it is also the top channel for toxic behavior. Fortunately, five AI technologies can help overcome this toxicity.

Voice chat has become very popular in games. The rise of multiplayer games contributed to this trend, but the bigger driver has been the evolution of online games into social experiences. Nearly half of players say they enjoy games more with voice chat, and over 68% use the feature.

Game makers also appreciate voice chat because it can help build stronger bonds between players and deliver better retention, longer sessions, and more frequent play. For many game makers, this translates into higher average revenue per user (ARPU).

However, we also know that voice chat is the biggest source of toxic behavior, far exceeding text chat, in-game play, and user-generated content. Toxic incidents directly hurt players and reduce playing time, playing frequency, and sentiment toward the game. While game makers have long had tools for monitoring toxic behavior in gameplay and text chat, voice chat remains largely a free-for-all.

The solution is AI-enabled voice chat moderation. Many game industry professionals know of AI-based tools for text chat moderation, and some may have heard of similar technology for voice chat. However, most people don’t know that effective voice chat moderation for gaming requires multiple AI-based capabilities.

Traditional moderation tools favor simplistic approaches such as keyword spotting. This technique is common in text chat moderation, and some teams have tried it for voice chat. It can flag or redact words from a known list, but you are constantly chasing new bad words and trying to catch up with bad actors. The approach also misses many toxic incidents while flagging some that are actually benign.
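To make that limitation concrete, here is a minimal Python sketch of keyword spotting applied to a voice chat transcript. The blocklist and the names `BLOCKLIST`, `keyword_flags`, and `redact` are hypothetical and for illustration only; they are not part of any Speechly product. The sketch flags a harmless combat callout while missing an insult spelled with digits.

```python
import re

# Hypothetical blocklist; a real one would be much longer and constantly changing.
BLOCKLIST = {"kill", "bomb", "noob"}


def keyword_flags(transcript: str, blocklist: set[str] = BLOCKLIST) -> list[str]:
    """Return every blocklisted word found in the transcript (case-insensitive)."""
    words = re.findall(r"[a-z']+", transcript.lower())
    return [w for w in words if w in blocklist]


def redact(transcript: str, blocklist: set[str] = BLOCKLIST) -> str:
    """Replace each blocklisted word with asterisks of the same length."""
    def mask(match: re.Match) -> str:
        word = match.group(0)
        return "*" * len(word) if word.lower() in blocklist else word

    return re.sub(r"[A-Za-z']+", mask, transcript)


if __name__ == "__main__":
    # A combat-game callout gets flagged even if it is part of normal play.
    print(keyword_flags("I'm going to kill you!"))  # ['kill']
    print(redact("I'm going to kill you!"))         # I'm going to **** you!
    # A trivially altered spelling slips straight through.
    print(keyword_flags("Nice shot, n00b"))         # []
```

Both failure modes come from the same design choice: the list only sees isolated tokens, so it knows nothing about the game being played and nothing about spellings it has not been told about yet.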

The issue is context. The same words can be toxic or appropriate depending on what is happening in the conversation or the game. For example, "I'm going to kill you!" could be flagged as a threat of physical violence, yet in a combat-based game it may simply describe a key objective. Similarly, someone may say they are going to plant a bomb at the courthouse. Should the game maker notify law enforcement, or is this a known strategy in the game?

Flagging either comment could produce a false positive, where the system identifies a toxic incident even though the comment is perfectly within the bounds of acceptable behavior for the game. False positives can be detrimental to a game because they can lead to false accusations and unjust penalties for players who are acting entirely in good faith.

Misunderstandings can also arise from sarcasm, cultural differences, and misinterpreted accents. This is why custom AI models are often essential for voice chat moderation. It is also why a single technique generally doesn’t get the job done.

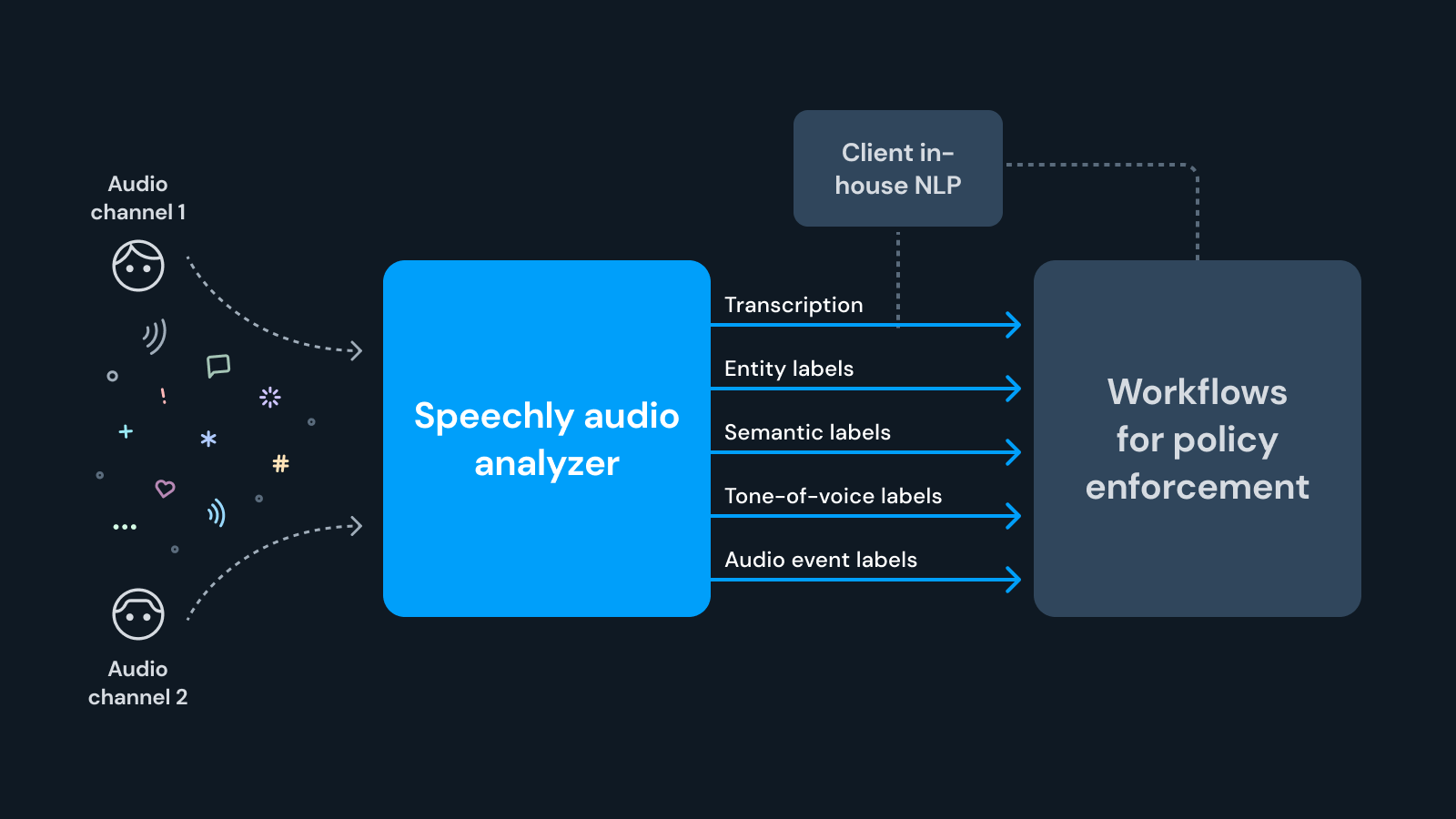

Several large game makers asked Speechly how to address voice chat toxicity without missing true positives or generating false positives. As we analyzed the problem, we identified five techniques in three categories that can help finally close the voice chat moderation gap.

The first category is accurately identifying what was said, which covers the transcript and entity labels. The second category relates to meaning and includes semantic labels and tone-of-voice labels. The third category covers signals that are not words, known as audio event labels.
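One way to picture the three categories working together is a single record per utterance that carries all five label types. The Python sketch below is hypothetical: the field names, label strings such as "threat", "playful", and "screaming", and the `should_escalate` rule are assumptions made for illustration, not Speechly's data model or moderation logic. It shows how the same transcript can lead to different decisions once tone, audio events, and game context are taken into account.

```python
from dataclasses import dataclass, field


# Hypothetical per-utterance record holding the five signal types.
# Field names and label values are illustrative assumptions only.
@dataclass
class UtteranceSignals:
    transcript: str                                               # what was said
    entity_labels: list[str] = field(default_factory=list)       # e.g. "location"
    semantic_labels: list[str] = field(default_factory=list)     # e.g. "threat"
    tone_labels: list[str] = field(default_factory=list)         # e.g. "playful"
    audio_event_labels: list[str] = field(default_factory=list)  # e.g. "screaming"


def should_escalate(signals: UtteranceSignals, combat_game: bool) -> bool:
    """Toy rule: escalate threats and distress unless game context explains them.

    A threat spoken in a playful tone inside a combat game, with no
    distress audio event, is treated as gameplay. Any other threat, or
    any utterance accompanied by screaming, is escalated for review.
    """
    is_threat = "threat" in signals.semantic_labels
    playful = "playful" in signals.tone_labels
    distressed = "screaming" in signals.audio_event_labels
    if is_threat and combat_game and playful and not distressed:
        return False
    return is_threat or distressed


if __name__ == "__main__":
    callout = UtteranceSignals(
        "I'm going to kill you!",
        semantic_labels=["threat"],
        tone_labels=["playful"],
    )
    print(should_escalate(callout, combat_game=True))   # False
    print(should_escalate(callout, combat_game=False))  # True
```

A transcript alone cannot separate these two cases; only combining it with the meaning, tone, and non-verbal signals (plus knowledge of the game) does, which is the motivation for using several AI-based features in concert.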

It is understandable that game makers would first look to what worked for them in text chat when considering how to address toxicity in voice chat. They usually figure out quickly that these techniques fall far short of meeting their moderation and mitigation objectives. The optimal solution involves a portfolio of AI-based features used in concert.

If you would like to learn more about any of these AI-driven techniques, feel free to contact our product team using our Contact Form.

Speechly is a YC-backed company building tools for speech recognition and natural language understanding. Speechly offers flexible deployment options (cloud, on-premise, and on-device), super accurate custom models for any domain, and privacy and scalability for hundreds of thousands of hours of audio.
