Hannes Heikinheimo

Sep 19, 2023

1 min read

As the internet has matured, so have the types of content users enjoy and the approaches for moderating online activity. Early online content was dominated by text and images, and audio played a smaller role. Fast forward to the 2020s and you see a very different ecosystem, one dominated by images, videos, and even real-time communication channels such as Clubhouse, Twitter Spaces, or metaverse platforms like Roblox that offer Voice Chat.

Since voice-based moderation has received less attention over the years than text-based moderation, this post outlines how to use Speech to Text technology to moderate Voice Chat and other voice-based content efficiently and effectively. We focus on keyword-based moderation, where the task is to detect specific bad words (or short phrases) in a user's speech.
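Once a transcript exists, the keyword detection step itself can be quite simple. The sketch below assumes the Speech to Text step has already returned a plain-text transcript and matches a blocklist of words and short phrases against it. The function name `find_flagged_terms` and the mild placeholder blocklist are hypothetical illustrations, not part of any Speechly API.

```python
import re

def find_flagged_terms(transcript: str, blocklist: list[str]) -> list[tuple[str, int]]:
    """Return (term, character offset) for each blocklisted word or phrase found in a transcript."""
    text = transcript.lower()
    hits = []
    for term in blocklist:
        # \b keeps a term from matching inside a longer word;
        # \s+ lets the words of a phrase be separated by any whitespace.
        pattern = r"\b" + r"\s+".join(re.escape(w) for w in term.lower().split()) + r"\b"
        for match in re.finditer(pattern, text):
            hits.append((term, match.start()))
    # Report hits in the order they occur in the transcript.
    return sorted(hits, key=lambda hit: hit[1])

print(find_flagged_terms("Oh darn, just shut up already", ["darn", "shut up"]))
# [('darn', 3), ('shut up', 14)]
```

Matching on word boundaries keeps a blocklisted word from firing inside a longer, innocent word ("darn" does not match "darned"), which is one small way to avoid needless flags.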

We interviewed 20+ experts in the Online Gaming & Metaverse space. Here are the key takeaways for Voice Moderation.

To build a Voice Moderation solution, you will need Speech to Text technology. Speech to Text does exactly what the name implies: it converts a user's speech into text that can be used for downstream tasks such as moderation. It's important to know that Speech to Text is a Machine Learning technology, which means that even when it is trained for a specific industry, the system will still sometimes make mistakes. In other words, on occasion it will "hear" something other than what was actually said.

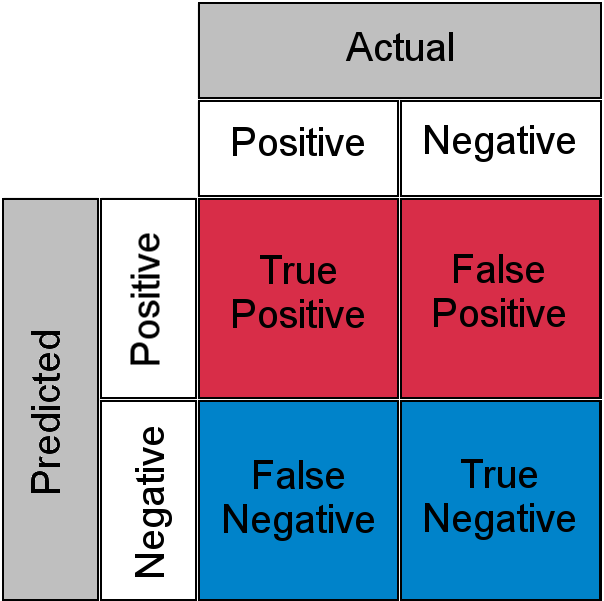

These Speech to Text mistakes need special attention in Voice Moderation, because poorly implemented moderation can harm the user experience. The moderation system should not miss obvious cases of toxicity, but accusing innocent users of bad behavior too often can be even more harmful. For this reason, businesses need to weigh the tradeoffs between a very strict moderation solution and a more tolerant one. Doing this requires an understanding of Speech to Text False Negatives and False Positives.

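False Negatives and False Positives are the most important variables to consider when using Speech to Text technology to build a Voice Moderation solution. A False Negative is a bad word that was spoken but not flagged; a False Positive is a flag raised on speech that did not actually contain a bad word.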

No matter how accurate the Speech to Text system is, False Negatives and False Positives cannot be eliminated entirely. What businesses do control is the sensitivity of their Speech to Text detection, and choosing that sensitivity means trading False Negatives against False Positives.
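One common way to expose sensitivity is a confidence threshold on detected words. The sketch below assumes the Speech to Text output carries a per-word confidence score between 0 and 1; many recognizers report something similar, but the `Word` structure and `flag_words` function here are hypothetical stand-ins, not a specific vendor's format.

```python
from dataclasses import dataclass

@dataclass
class Word:
    """One recognized word; a hypothetical stand-in for real Speech to Text output."""
    text: str
    confidence: float  # assumed to range from 0.0 to 1.0

def flag_words(words: list[Word], blocklist: set[str], threshold: float) -> list[Word]:
    """Flag blocklisted words recognized with confidence >= threshold.

    Lower threshold: more sensitive, fewer False Negatives, more False Positives.
    """
    return [w for w in words if w.text.lower() in blocklist and w.confidence >= threshold]

words = [Word("you", 0.98), Word("darn", 0.55), Word("noob", 0.91)]
print([w.text for w in flag_words(words, {"darn", "noob"}, threshold=0.9)])  # ['noob']
print([w.text for w in flag_words(words, {"darn", "noob"}, threshold=0.5)])  # ['darn', 'noob']
```

Lowering the threshold from 0.9 to 0.5 catches the uncertain "darn" as well; whether that is a caught bad word or a mis-hearing is exactly the False Negative versus False Positive question.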

False Negatives and False Positives are directly linked: if you reduce one, the other will increase. To see why, let's look at an example with a Human Moderator and two different scenarios.

When the Moderator hears what sounds like profanity, assume they have one of two confidence levels in what they heard: HIGH or LOW.

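The Moderator has two possible scenarios:

Scenario 1: Flag only when confidence is HIGH. The Moderator will flag less often because they are more selective in the flagging process. Fewer innocent users are flagged (fewer False Positives), but some real profanity heard with LOW confidence goes unflagged (more False Negatives).

Scenario 2: Flag when confidence is either HIGH or LOW. The Moderator will flag more frequently because they are less strict in the flagging process. More real profanity is caught (fewer False Negatives), but more innocent users are flagged (more False Positives).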
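The two scenarios can be compared by counting errors on a handful of made-up events: a strict policy that flags only HIGH-confidence hearings, and a lenient one that flags both HIGH and LOW. Each event records the Moderator's confidence and whether profanity was actually said; the events and the `count_errors` helper are invented purely for illustration.

```python
# Invented events: (how confident the Moderator was, whether profanity was actually said).
EVENTS = [
    ("HIGH", True),
    ("HIGH", True),
    ("LOW", True),
    ("LOW", False),
    ("LOW", False),
    ("HIGH", False),
]

def count_errors(events, flag_levels):
    """Return (false_positives, false_negatives) for a policy that flags the given confidence levels."""
    false_positives = sum(1 for level, profane in events if level in flag_levels and not profane)
    false_negatives = sum(1 for level, profane in events if level not in flag_levels and profane)
    return false_positives, false_negatives

strict = count_errors(EVENTS, {"HIGH"})          # flag only HIGH-confidence hearings
lenient = count_errors(EVENTS, {"HIGH", "LOW"})  # flag both HIGH and LOW
print("strict  (FP, FN):", strict)   # (1, 1)
print("lenient (FP, FN):", lenient)  # (3, 0)
```

On these toy events the lenient policy removes the one False Negative, but at the cost of two extra False Positives.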

In this situation, the business has to decide whether to be more or less strict in their moderation flagging process.

The tradeoff decision made in this Human Moderator example is exactly how you should look at tradeoffs with Speech to Text for Moderation!

For each level of False Negatives there is a matching level of False Positives, and the pair you settle on is called the Operating Point of the system. Finding the ideal Operating Point is the main goal when using Speech to Text for Moderation, but where that point lies depends on the context and goals of the individual business.

For example, if no profanity is tolerated, you might accept a higher False Positive rate and have a human in the loop verify each flag. Alternatively, if you want to fully automate moderation and do not have the resources to verify every flag by hand, you would choose a lower False Positive rate (and may have to tolerate more False Negatives as a consequence).
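Choosing an Operating Point can be framed as a constrained search: sweep a detection threshold over labeled audio, measure both error rates, and keep the threshold with the fewest False Negatives whose False Positive rate stays within a budget. This is a minimal sketch, assuming you have an evaluation set with a detector score and a true/false label per utterance; the function names and the toy numbers are hypothetical.

```python
# Made-up evaluation data: detector score per utterance, and whether it truly contained a blocklisted term.
SCORES = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20]
LABELS = [True, True, False, True, False, True, False, False]

def error_rates(scores, labels, threshold):
    """Return (false_positive_rate, false_negative_rate) when flagging every score >= threshold.

    Assumes the labels contain at least one positive and one negative example.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    return fp / negatives, fn / positives

def pick_operating_point(scores, labels, max_fp_rate):
    """Return (threshold, fp_rate, fn_rate) with the lowest FN rate whose FP rate is within budget."""
    # Candidate thresholds: every observed score, plus infinity (flag nothing, FP rate 0).
    candidates = sorted(set(scores)) + [float("inf")]
    best = None
    for t in candidates:
        fpr, fnr = error_rates(scores, labels, t)
        if fpr > max_fp_rate:
            continue
        # Prefer fewer False Negatives; break ties with fewer False Positives.
        if best is None or (fnr, fpr) < (best[2], best[1]):
            best = (t, fpr, fnr)
    return best

# Human in the loop: a generous False Positive budget.
print(pick_operating_point(SCORES, LABELS, 0.5))  # (0.4, 0.5, 0.0)
# Fully automated: no False Positives allowed.
print(pick_operating_point(SCORES, LABELS, 0.0))  # (0.9, 0.0, 0.5)
```

With the generous budget the search settles on a low threshold and misses nothing; with a zero budget it moves to a higher threshold and accepts that half of the real bad words go unflagged on this toy data.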

To use Speech to Text for Voice Moderation, you need a solution that can be adapted to the specific language that is relevant to your business and use case. By using a dedicated Speech to Text model trained on data relevant to your use case, Speechly can reduce False Negatives without increasing the False Positive rate.

If you would like to learn more about how Speechly’s Speech to Text technology can be adapted to target specific jargon from your business or industry, reach out to the team with our Contact Us form.

Cover Photo by Karolina Grabowska: Pexels

Speechly is a YC-backed company building tools for speech recognition and natural language understanding. Speechly offers flexible deployment options (cloud, on-premise, and on-device), super accurate custom models for any domain, and privacy and scalability for hundreds of thousands of hours of audio.
