Hannes Heikinheimo

Sep 19, 2023

1 min read

The Web Speech API is an experimental browser standard that enables web developers to effortlessly process voice input from their users. Its simple API can turn on the device's microphone and apply a speech-to-text algorithm to convert whatever the user says into text that the web app can process. At first glance, it seems to open the door to voice-enabled web apps.
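To make this concrete, here is a minimal sketch of what using the native API can look like. The helper name `startDictation` and its `globalObject` parameter are assumptions made for illustration (in a browser you would pass `window`); the properties and events it touches (`lang`, `continuous`, `interimResults`, `onresult`, `start`) belong to the Web Speech API's `SpeechRecognition` interface.

```javascript
// Minimal sketch of native Web Speech API usage.
// `globalObject` is normally `window`; it is a parameter here only so the
// sketch can be exercised outside a browser (an assumption of this example).
function startDictation(globalObject, onTranscript) {
  // Some browsers expose the constructor only with a webkit prefix.
  const Recognition =
    globalObject.SpeechRecognition || globalObject.webkitSpeechRecognition;
  if (!Recognition) {
    return null; // The browser does not implement the API.
  }

  const recognition = new Recognition();
  recognition.lang = 'en-US';
  recognition.continuous = false; // stop after the first utterance
  recognition.interimResults = false; // only deliver final results

  recognition.onresult = (event) => {
    // Each result holds ranked alternatives; index 0 is the most likely one.
    const result = event.results[event.resultIndex];
    onTranscript(result[0].transcript);
  };

  recognition.start(); // the browser may prompt for microphone permission
  return recognition;
}
```

In a browser this could be called as `startDictation(window, (text) => console.log(text))`. A `null` return value signals that the browser offers no implementation at all.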

However, browser support for this API is limited. At the time of writing, most of that support is concentrated in browsers made by Google, which authored much of the API's specification. Indeed, the only browsers that support it are owned by big tech companies with the scale to bundle a free speech-to-text service. Apple has recently joined Google by offering a Siri-based equivalent in Safari.

This has a couple of consequences. Firstly, web apps that use this API offer a fragmented experience across browsers. One example is Duolingo, which only offers its voice exercises on Chrome. Even among the browsers that do offer the API, the speech-to-text algorithm differs, resulting in different transcriptions and different user experiences from one browser to the next.

Secondly, there is a trust factor. Developers using the API probably don't realise that they are sending their users' voice data to a service owned by a big tech company like Google. They may assume that the transcription algorithm runs on the device when it is in fact performed in the cloud. Owning both the browser and the speech recognition service also gives these companies the power to make arbitrary changes to the API, including turning it off, and to lock out other browser vendors. An example is Brave, a browser based on Chromium, which is unable to use Google's speech recognition service due to restrictions imposed by Google. Such restrictions widen the feature gap between browsers like Chrome and the rest of the field.

Browsers do not have to be limited to using the speech recognition services owned by Google and Apple. There are more widely supported browser standards, like the Media Capture and Streams API (often called the Media Streams API), that enable developers to stream audio data from a microphone to any service. The Web Speech API can be replicated by building code on top of these APIs, escaping the vendor lock-in imposed by the browser's native choice of speech recognition service. Indeed, it can be replicated on browsers that don't support it in the first place.
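As a rough sketch of that idea, the function below captures microphone audio with `getUserMedia` and hands encoded chunks to a callback. The function name `streamMicrophone` and the `sendChunk` callback are hypothetical, and passing in `mediaDevices` and `Recorder` (normally `navigator.mediaDevices` and the browser's `MediaRecorder`) is a choice made only to keep the example testable.

```javascript
// Sketch: capture microphone audio and hand it to any transcription service.
// `mediaDevices` is normally `navigator.mediaDevices` and `Recorder` is
// normally the browser's `MediaRecorder` (both passed in for testability).
// `sendChunk` is a hypothetical callback that forwards audio to your service.
async function streamMicrophone(mediaDevices, Recorder, sendChunk) {
  // Ask the user for microphone access; resolves with a MediaStream.
  const stream = await mediaDevices.getUserMedia({ audio: true });

  const recorder = new Recorder(stream);
  recorder.ondataavailable = (event) => {
    // event.data is a Blob of encoded audio; skip empty chunks.
    if (event.data && event.data.size > 0) {
      sendChunk(event.data);
    }
  };

  recorder.start(250); // emit a chunk roughly every 250 ms

  // Return a function that stops recording and releases the microphone.
  return () => {
    recorder.stop();
    stream.getTracks().forEach((track) => track.stop());
  };
}
```

Many speech recognition services expect a specific audio encoding or sample rate, so a real integration may need to process the stream with the Web Audio API rather than rely on `MediaRecorder` output.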

Code that implements missing browser functionality like this is called a polyfill. The good news is that there exists a polyfill for the Web Speech API that uses Speechly’s speech recognition service under the hood. Any web app using this polyfill would be able to provide a consistent voice-enabled user experience across all browsers, using an API that the developer has chosen, can configure, and can trust.
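To illustrate the general shape of such a polyfill, here is a simplified sketch. It is not the Speechly polyfill's implementation: `createRecognitionPolyfill` and `createTranscriber` are hypothetical names, and the transcriber is assumed to expose `start(onText)` and `stop()`, with `onText` receiving `(text, isFinal)`. Only a small subset of the `SpeechRecognition` interface is mimicked.

```javascript
// Sketch of a polyfill's shape: a class exposing a subset of the
// SpeechRecognition interface, backed by a service of the developer's choosing.
// `createTranscriber` is hypothetical: it must return an object with
// start(onText) and stop(), where onText receives (text, isFinal).
function createRecognitionPolyfill(createTranscriber) {
  return class PolyfilledSpeechRecognition {
    constructor() {
      this.continuous = false;
      this.interimResults = false;
      this.onresult = () => {};
      this.onend = () => {};
      this.transcriber = null;
    }

    start() {
      if (this.transcriber) return; // already listening
      this.transcriber = createTranscriber();
      this.transcriber.start((text, isFinal) => {
        if (!isFinal && !this.interimResults) return;
        // Mimic the native event shape: results[i][0].transcript, results[i].isFinal.
        // The confidence value is a placeholder, not a real score.
        const result = [{ transcript: text, confidence: 1 }];
        result.isFinal = isFinal;
        this.onresult({ resultIndex: 0, results: [result] });
        if (isFinal && !this.continuous) this.stop();
      });
    }

    stop() {
      if (!this.transcriber) return;
      this.transcriber.stop();
      this.transcriber = null;
      this.onend();
    }
  };
}
```

Because the returned class follows the same event and property names as the native interface, application code written against the Web Speech API could, in principle, use it without changes.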

The polyfill is published on npm as @speechly/speech-recognition-polyfill, with its source code on GitHub. It can be used in isolation, but if you are using React to build your web app, we recommend you combine it with react-speech-recognition for the simplest set-up.

The repositories both include examples of the two libraries working together and full API documentation, but we'll repeat the basic example here to give you a taste.

First, start developing with Speechly and get an app ID. Speechly provides a quick guide for this.

Next, install the two libraries in your React app:

```shell
npm i --save @speechly/speech-recognition-polyfill
npm i --save react-speech-recognition
```

We're going to make a simple push-to-talk button component. When held down, it will display a transcript from the microphone. When the button is released, transcription will end. Using your Speechly app ID, create a React component like the following:

import React from 'react';

import { createSpeechlySpeechRecognition } from '@speechly/speech-recognition-polyfill';

import SpeechRecognition, { useSpeechRecognition } from 'react-speech-recognition';

const appId = '<INSERT_SPEECHLY_APP_ID_HERE>';

const SpeechlySpeechRecognition = createSpeechlySpeechRecognition(appId);

SpeechRecognition.applyPolyfill(SpeechlySpeechRecognition);

const Dictaphone = () => {

const { transcript, listening } = useSpeechRecognition();

const startListening = () => SpeechRecognition.startListening({ continuous: true });

return (

<div>

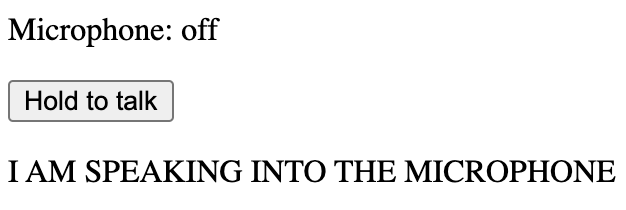

<p>Microphone: {listening ? 'on' : 'off'}</p>

<button

onTouchStart={startListening}

onMouseDown={startListening}

onTouchEnd={SpeechRecognition.stopListening}

onMouseUp={SpeechRecognition.stopListening}

>Hold to talk</button>

<p>{transcript}</p>

</div>

);

};

export default Dictaphone;

Run your web app, hold down the button and speak into your microphone (you may need to give the browser permission to use the microphone first). You should see your speech transcribed like this:

Give it a try and let us know how you get on! If you have any feedback on either library, raise a GitHub issue on the polyfill repository or react-speech-recognition.

Speechly is a YC-backed company building tools for speech recognition and natural language understanding. Speechly offers flexible deployment options (cloud, on-premise, and on-device), highly accurate custom models for any domain, and privacy and scalability for hundreds of thousands of hours of audio.
