Hannes Heikinheimo

Sep 19, 2023

1 min read

New with Speechly? Please take a look at Quick start to learn how to get started or see our Integrations documentation for simple integration tutorials!

The goal of this blog post is to show how you can add a voice user interface to any web application. As an example, we will build a simple image editing web application that can be controlled by voice. You can see the application in action at https://speechly.github.io/photo-editor-demo/ and the source code at https://github.com/speechly/photo-editor-demo.

More specifically, in this tutorial, you learn how to:

Let’s start with the most fun part and configure the Speechly application.

First of all, you will need access to the Speechly dashboard. You can get access by joining the waitlist.

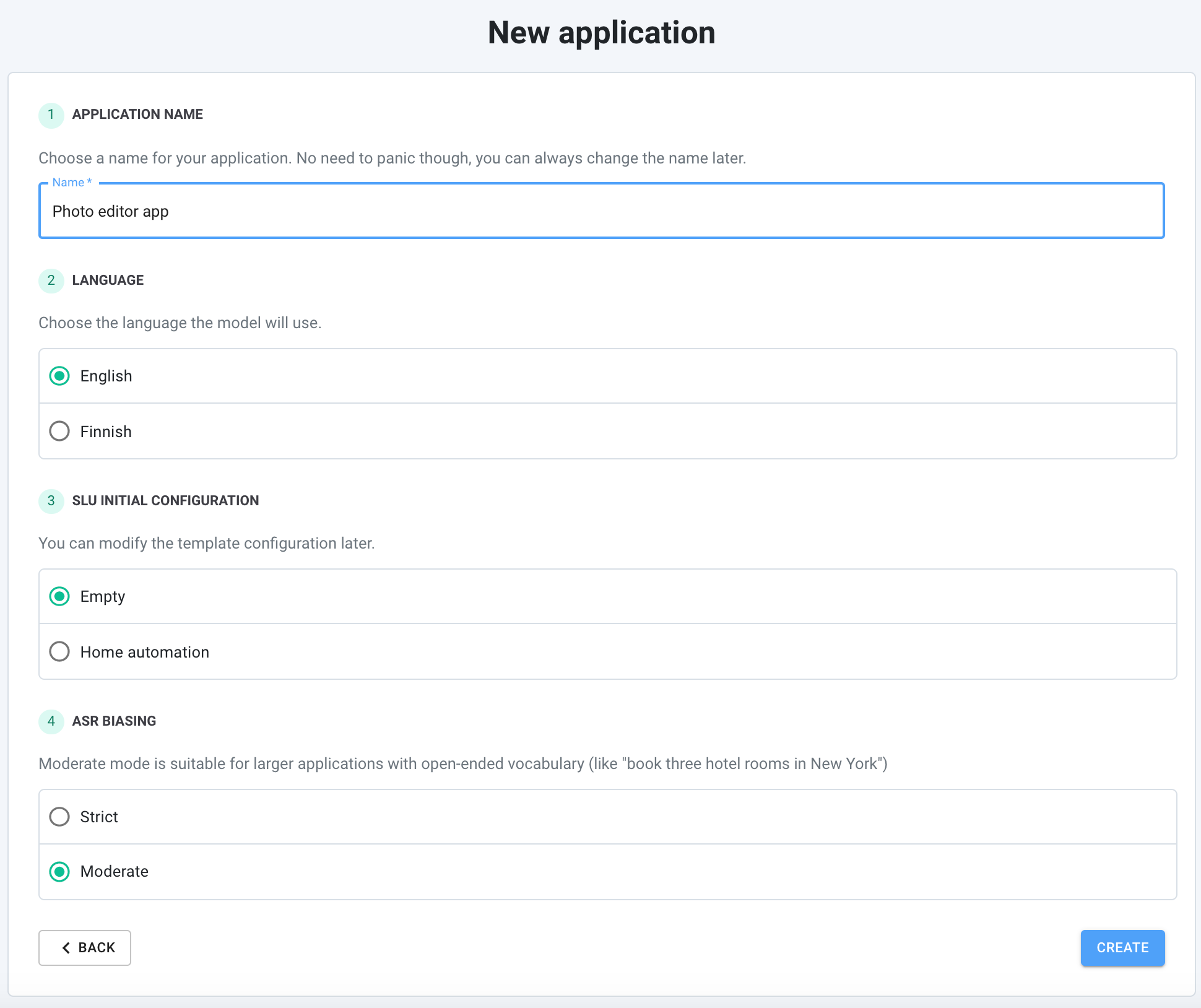

Next, you can proceed by creating a new application. You start by naming it, and choosing the language. For the “Template”, choose “Empty”, and for the “ASR Biasing”, choose “Moderate”. Now click “Create” to proceed to the configuration view.

In the configuration view, let’s start by adding the Speechly Annotation Language (SAL) templates to the configuration. You can read more about the SAL by visiting our documentation.

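Let’s add the following features to our editor: filters that can be switched on and off, image properties whose values can be adjusted, and an undo action.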

In our simple photo editor, a filter is something that is either “on” or “off”, while properties such as brightness have a numeric value that can be increased or decreased. These features could thus be mapped to the following intents: add_filter, remove_filter, increase, decrease, and undo.

Since there are multiple options for filters, let’s use a filter entity. Similarly, there are several properties whose value can be changed; therefore, we’ll also add a property entity.

For the filters, let’s add the following SAL templates to our configuration:

filter = [vintage|faded|sepia|classic|kodachrome|technicolor|polaroid|black and white|grayscale]

add_filter_cmd = [add | activate | make it | make it look | make it like]

del_filter_cmd = [remove | deactivate]

…

*add_filter $add_filter_cmd $filter(filter)

*remove_filter $del_filter_cmd $filter(filter)

The variable filter refers to a list of names for different filters. The variables add_filter_cmd and del_filter_cmd are lists of commands that can be used for adding filters to an image or removing them from it. The utterance *add_filter $add_filter_cmd $filter(filter) combines the add_filter_cmd and filter lists. Users could say, for example, “Make it look classic” or “Activate black and white” to have this intent detected. $filter(filter) has its entity name in parentheses, which enables the Speechly API to recognize “vintage” as a value of the filter entity when the user says “Make it look vintage.” Upon receiving the utterance, the API returns both the add_filter intent and the filter entity with the value vintage. With this information, our application can add the desired filter effect to the image.
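To make the relationship between the command list, the filter list, and the returned intent and entity more concrete, here is a minimal TypeScript sketch. It is a toy string matcher written only for illustration; it is not how the Speechly API works internally, and the names matchAddFilter and ToyResult are hypothetical.

```typescript
// Toy illustration only: a naive matcher for "<command> <filter>" phrases.
// The two lists mirror the SAL variables filter and add_filter_cmd.
const toyFilters: string[] = [
  'vintage', 'faded', 'sepia', 'classic', 'kodachrome',
  'technicolor', 'polaroid', 'black and white', 'grayscale',
];
const toyAddFilterCmds: string[] = [
  'add', 'activate', 'make it', 'make it look', 'make it like',
];

// Hypothetical result shape: one intent plus the entities found.
interface ToyResult {
  intent: string;
  entities: { type: string; value: string }[];
}

function matchAddFilter(utterance: string): ToyResult | null {
  const text = utterance.trim().toLowerCase();
  // Try longer commands first so "make it look" wins over "make it".
  const cmds = toyAddFilterCmds.slice().sort((a, b) => b.length - a.length);
  for (const cmd of cmds) {
    if (text.indexOf(cmd + ' ') !== 0) {
      continue; // the utterance does not start with this command
    }
    const rest = text.slice(cmd.length + 1);
    if (toyFilters.indexOf(rest) !== -1) {
      return {
        intent: 'add_filter',
        entities: [{ type: 'filter', value: rest }],
      };
    }
  }
  return null; // no command/filter combination matched
}

console.log(matchAddFilter('Make it look vintage'));
```

In this sketch, a phrase such as “Activate black and white” yields the add_filter intent with a filter entity, while a phrase that does not start with one of the add commands yields null.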

For changing the values of the image properties, we can go with the following templates:

property = [brightness | luminosity | light | contrast | saturation | color]

increase_cmd = [increase|add more|more]

decrease_cmd = [decrease|reduce|less]

…

*increase $increase_cmd $property(property)

*decrease $decrease_cmd $property(property)

Here we define the changeable properties in the property variable and the commands to adjust those properties in the variables increase_cmd and decrease_cmd. Then we combine them into utterances, which allow us to detect the *increase and *decrease intents as well as the property entity when a user says phrases like “Increase saturation” or “Decrease contrast”.

The last intent is “undo”, and it is quite straightforward:

*undo [go back | go one step back | undo | i don't want that | no i don't want that | cancel that | cancel]

When a user says one of those commands, the *undo intent can be detected. Since we don’t have any parameters for the undo action, we don’t need to add any entities.

Let’s put it all together now:

filter = [vintage|faded|sepia|classic|kodachrome|technicolor|polaroid|black and white|grayscale]

property = [brightness | luminosity | light | contrast | saturation | color]

increase_cmd = [increase|add more|more]

decrease_cmd = [decrease|reduce|less]

add_filter_cmd = [add | activate | make it | make it look | make it like]

del_filter_cmd = [remove | deactivate]

*undo [go back | go one step back | undo | i don't want that | no i don't want that | cancel that | cancel]

*add_filter $add_filter_cmd $filter(filter)

*remove_filter $del_filter_cmd $filter(filter)

*increase $increase_cmd $property(property)

*decrease $decrease_cmd $property(property)

*increase $increase_cmd $property(property) {and} *increase $increase_cmd $property(property)

*decrease $decrease_cmd $property(property) {and} *decrease $decrease_cmd $property(property)

*increase $increase_cmd $property(property) {and} *decrease $decrease_cmd $property(property)

*decrease $decrease_cmd $property(property) {and} *increase $increase_cmd $property(property)

*add_filter $add_filter_cmd $filter(filter) {and} *increase $increase_cmd $property(property)

*add_filter $add_filter_cmd $filter(filter) {and} *decrease $decrease_cmd $property(property)

*increase $increase_cmd $property(property) {and} *add_filter $add_filter_cmd $filter(filter)

*decrease $decrease_cmd $property(property) {and} *add_filter $add_filter_cmd $filter(filter)

Once you’ve added the SAL templates, including all the intents and entities, to the configuration, you can click “Deploy” to start deploying your Speechly application. It may take a while, but once it’s ready, you can try it out in the Speechly Playground by clicking the “Try” button.

In the playground, verify that you get the correct intents and entities, at least for the utterances that you have defined in your configuration. If you do not get the desired results, try speaking at a moderate pace (neither too slowly nor too quickly), and make sure that your microphone is working properly.

Feel free to modify the configuration, but make sure you use the same intent and entity names we defined here.

This tutorial uses React for building the application, but the Speechly browser-client can also be used with other JavaScript frameworks or with vanilla JavaScript. So please keep reading even if you are not familiar with React! The tutorial will be useful even if you plan to use some other framework.

Let’s create our React application:

npm install -g create-react-app

create-react-app speechly-image-editor --template typescript

cd speechly-image-editor

Now, let’s add our dependencies:

npm install --save @speechly/browser-client

npm install --save fabric # for the image manipulation operations

To save some time, here is some pre-built code for our app:

cd src/

curl -o Mic.tsx https://raw.githubusercontent.com/speechly/photo-editor-demo/master/src/Mic.tsx

curl -o ConnectionContext.tsx https://raw.githubusercontent.com/speechly/photo-editor-demo/master/src/ConnectionContext.tsx

curl -o CanvasEditor.ts https://raw.githubusercontent.com/speechly/photo-editor-demo/master/src/CanvasEditor.ts

curl -o layout.css https://raw.githubusercontent.com/speechly/photo-editor-demo/master/src/layout.css

Mic.tsx is the microphone component that listens to the audio in the browser, ConnectionContext.tsx is the component that handles the connection to the Speechly API, CanvasEditor.ts is a minimal image editor, and layout.css contains the styling for the application. Feel free to check them out for the implementation details!

Let’s start by adding the following code to src/App.tsx:

import React, { useEffect, useRef, useState } from 'react';

import './layout.css';

import { Mic } from './Mic';

import { CanvasEditor } from './CanvasEditor';

import ConnectionContext, {

ConnectionContextProvider,

} from './ConnectionContext';

const App = () => {

const imageEditorRef = useRef<HTMLDivElement>(null);

const transcriptDivRef = useRef<HTMLDivElement>(null);

const [imageEditorInstance, setImageEditorInstance] =

useState<CanvasEditor>();

const [imagePath, setImagePath] = useState<string>();

const appId = process.env.REACT_APP_APP_ID;

if (!appId) {

throw new Error('REACT_APP_APP_ID environment variable is undefined!');

}

const language = process.env.REACT_APP_LANGUAGE;

if (!language) {

throw new Error('REACT_APP_LANGUAGE environment variable is undefined!');

}

// create image editor here

return (

<div>

<section className="photo">

<div ref={imageEditorRef} />

</section>

<section className="app">

<div ref={transcriptDivRef} />

</section>

<section className="controls">Microphone here</section>

</div>

);

};

export default App;

REACT_APP_APP_ID and REACT_APP_LANGUAGE are environment variables that hold your Speechly app ID and your chosen language. You can find your app ID and language code in the Speechly dashboard, where you configured your app in chapter 1.

You can run the application with the correct app id and language like this:

$ export REACT_APP_APP_ID="your-app-id"

$ export REACT_APP_LANGUAGE="en-US"

$ npm run start
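The two environment variable checks in App follow the same pattern, so they could be folded into one small helper. The sketch below is only a suggestion; requireEnv is a hypothetical name and is not part of the demo repository.

```typescript
// Hypothetical helper: returns the value if it is set, otherwise throws
// the same kind of error that App throws for a missing variable.
function requireEnv(name: string, value: string | undefined): string {
  if (!value) {
    throw new Error(`${name} environment variable is undefined!`);
  }
  return value;
}

// Possible usage inside App (shown as comments because process.env is
// only meaningful inside the React build):
// const appId = requireEnv('REACT_APP_APP_ID', process.env.REACT_APP_APP_ID);
// const language = requireEnv('REACT_APP_LANGUAGE', process.env.REACT_APP_LANGUAGE);
```

Because the helper returns a plain string, appId and language no longer have the type string | undefined in the rest of the component.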

Next, let’s create an instance of the image editor. Add the following code to the App function, just before its return statement:

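// const [width, height] = useWindowSize(); // left out: useWindowSize is not defined or imported in these snippets, and width/height are not used below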

useEffect(() => {

if (!imageEditorInstance && imagePath) {

const editor = new CanvasEditor(

imageEditorRef.current as HTMLDivElement,

imagePath as string,

);

setImageEditorInstance(editor);

}

}, [imageEditorRef, imagePath, imageEditorInstance]);

It does not yet have any effect, because imagePath is not initialized. To keep things simple, add an image to the public/ folder and use the image name as the initial value for imagePath by updating the existing useState declaration in App:

const [imagePath, setImagePath] = useState<string>('pic.jpg');

In this example pic.jpg refers to the image in public/pic.jpg.

Let’s then add the microphone to the page and connect it to the Speechly API. The connection is handled by the Speechly browser client library. You can check the browser-client-example repo for a simple example of how to use the Speechly browser client.

Let’s save some more time and get more pre-built code:

curl -o speechlyTools.ts https://raw.githubusercontent.com/speechly/photo-editor-demo/master/src/speechlyTools.ts

This file contains the mappings between the SAL intents and the image editor actions. Let’s take a more detailed look at the updateImageEditorBySegmentChange function.

This function is triggered every time the app receives a response from the Speechly API, for instance when the API detects a new intent or entity in the voice input, or simply when a user utters a new word. The voice input is divided into segments. A segment encapsulates related words, intents and entities in the speech until the point in time when the user stops speaking or proceeds to express a new intent. For example, the utterance “Make it look vintage, and decrease the brightness” is split into two segments: “Make it look vintage” and “decrease the brightness”. You can find out more about segments in the documentation. For our image editing application, we are specifically interested in the intents and the entities that define which actions we want to execute.
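As a rough mental model, the two segments in the example above could be pictured as the following data. The shapes below are simplified and hypothetical (ToySegment and summarizeSegment are illustrative names); the real segment type exported by @speechly/browser-client may carry more fields.

```typescript
// Simplified, assumed shapes for illustration only.
interface ToyEntity {
  type: string;
  value: string;
}

interface ToySegment {
  id: number;
  isFinal: boolean; // true once the segment will not change anymore
  words: string[];
  intent: string;
  entities: ToyEntity[];
}

// "Make it look vintage, and decrease the brightness" as two segments.
const toySegments: ToySegment[] = [
  {
    id: 0,
    isFinal: true,
    words: ['make', 'it', 'look', 'vintage'],
    intent: 'add_filter',
    entities: [{ type: 'filter', value: 'vintage' }],
  },
  {
    id: 1,
    isFinal: true,
    words: ['decrease', 'the', 'brightness'],
    intent: 'decrease',
    entities: [{ type: 'property', value: 'brightness' }],
  },
];

// Render a segment as "intent(type=value, ...)" for quick inspection.
function summarizeSegment(segment: ToySegment): string {
  const parts = segment.entities.map((e) => `${e.type}=${e.value}`);
  return `${segment.intent}(${parts.join(', ')})`;
}

for (const segment of toySegments) {
  console.log(summarizeSegment(segment));
}
```

Each segment carries exactly one intent and the entities that belong to it, which is all the image editor needs in order to decide what to do.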

Now, let’s take a look at the code in updateImageEditorBySegmentChange. It checks whether the segment is not going to change anymore (isFinal), and calls one of the imageEditor functions, depending on the user intent.

For example, if intent.intent === "add_filter", we need to find and apply a filter. To find out which filter needs to be applied, we can check the entity values in that segment:

const collectEntity = (entityList, entityType: string) => {

const entities = entityList.filter(item => item.type === entityType);

if (entities.length > 0) {

// In our case there should only be a single entity of a given type in a segment,

// so we just return the first item on the list if it exists.

return entities[0].value.toLowerCase();

}

return '';

}

…

// filter name can be vintage, faded, sepia…

const filterName = this.collectEntity(segment.entities, "filter");

Then we need to verify that we can handle the requested filter. For example, “black and white” is called “grayscale” in the image editor, so we use entity2canonical mapping to look it up. After that we call the enableFilter function of the image editor, and the filter is applied!

if (filterName in this.entity2canonical) {

imageEditor.enableFilter(this.entity2canonical[filterName]);

}
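The lookup step can be sketched on its own as follows. Only the “black and white” to “grayscale” pair comes from the text above; the other entries and the function name toCanonicalFilter are assumptions made for this example, so check speechlyTools.ts for the actual mapping.

```typescript
// Hypothetical mapping from spoken filter names to editor filter names.
// Only 'black and white' -> 'grayscale' is taken from the tutorial text.
const entity2canonicalSketch: Record<string, string> = {
  vintage: 'vintage',
  sepia: 'sepia',
  grayscale: 'grayscale',
  'black and white': 'grayscale',
};

// Return the editor's name for a spoken filter, or undefined if unknown.
function toCanonicalFilter(spoken: string): string | undefined {
  const key = spoken.trim().toLowerCase();
  // hasOwnProperty ignores inherited keys such as 'toString'.
  if (Object.prototype.hasOwnProperty.call(entity2canonicalSketch, key)) {
    return entity2canonicalSketch[key];
  }
  return undefined;
}

console.log(toCanonicalFilter('Black and White'));
```

One design note: the in operator also matches inherited property names such as toString, so this sketch uses an own-property check instead. With a fixed set of entity values from the configuration this rarely matters, but it is a cheap safeguard.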

Similarly, when intent.intent === "increase", we call the incrementProperty function of the image editor to change the property value. To find out which property the user wants to change, we use the property entity:

const propertyName = this.collectEntity(segment.entities, 'property');

There are also specific conditions for remove_filter and decrease intents.
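Putting the pieces together, the overall dispatch could look roughly like the sketch below. It is not the code from speechlyTools.ts: enableFilter and incrementProperty are named in this tutorial, while disableFilter, decrementProperty, undo, and the SketchEditor and SketchSegment shapes are assumptions made for illustration.

```typescript
// Assumed editor interface; only enableFilter and incrementProperty are
// named in the tutorial, the other method names are hypothetical.
interface SketchEditor {
  enableFilter(name: string): void;
  disableFilter(name: string): void;
  incrementProperty(name: string): void;
  decrementProperty(name: string): void;
  undo(): void;
}

// Simplified, assumed segment shape (the real one may have more fields).
interface SketchSegment {
  isFinal: boolean;
  intent: { intent: string };
  entities: { type: string; value: string }[];
}

// Return the first entity value of the given type, lower-cased, or ''.
function firstEntityValue(segment: SketchSegment, type: string): string {
  for (const entity of segment.entities) {
    if (entity.type === type) {
      return entity.value.toLowerCase();
    }
  }
  return '';
}

// Apply a segment to the editor; returns true if an editor method was called.
function applySegment(editor: SketchEditor, segment: SketchSegment): boolean {
  if (!segment.isFinal) {
    return false; // the segment may still change, so do nothing yet
  }
  const filter = firstEntityValue(segment, 'filter');
  const property = firstEntityValue(segment, 'property');
  switch (segment.intent.intent) {
    case 'add_filter':
      if (filter) { editor.enableFilter(filter); return true; }
      return false;
    case 'remove_filter':
      if (filter) { editor.disableFilter(filter); return true; }
      return false;
    case 'increase':
      if (property) { editor.incrementProperty(property); return true; }
      return false;
    case 'decrease':
      if (property) { editor.decrementProperty(property); return true; }
      return false;
    case 'undo':
      editor.undo();
      return true;
    default:
      return false; // unknown intent: ignore the segment
  }
}
```

Acting only on final segments keeps the editor from applying an action based on a tentative transcript that the API may still revise.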

Now, let’s replace the “Microphone here” placeholder in src/App.tsx with the following code:

<ConnectionContextProvider

appId={appId}

language={language}

imageEditor={imageEditorInstance as CanvasEditor}

transcriptDiv={transcriptDivRef.current as HTMLDivElement}

>

<ConnectionContext.Consumer>

{({ stopContext, startContext, closeClient, clientState }) => {

return (

<div>

<Mic

onUp={stopContext}

onDown={startContext}

onUnmount={closeClient}

clientState={clientState}

classNames="Playground__mic"

/>

</div>

);

}}

</ConnectionContext.Consumer>

</ConnectionContextProvider>

Now you should be able to launch the app by using your own Speechly app ID and see the page with an image and the microphone button.

Try using the same utterances you used in chapter 1 in the Speechly playground.

You can say, for example, “Make it look vintage”, “Increase saturation”, or “Decrease contrast”.

In this blog post, we walked you through how to set up a photo editor web application that can be controlled by voice, with the help of the Speechly SLU and React. We configured the application in the Speechly dashboard using the SAL, deployed it, and tried it out in the Speechly playground. We then put together our own simple image editor. You can take a look at the live demo and the source code of this app for more details. Feel free to contact Speechly support at hello@speechly.com if you have any questions!

Speechly is a YC-backed company building tools for speech recognition and natural language understanding. Speechly offers flexible deployment options (cloud, on-premise, and on-device), highly accurate custom models for any domain, and privacy and scalability for hundreds of thousands of hours of audio.
